News from Atol Solutions

Collecting Meaningful Data from the Web: Now a Reality

Web data is growing at a fast pace, and we can expect new Web technologies and tools to arrive on the scene in quick order. Also, as social media tools reach more and more users, organizations will need to continue digging ever further into the Web. Some traditional data integration applications are already supporting this type of functionality, or will be supporting it in the near future. We haven’t seen the last of this story. Stay tuned.

by Jorge García - December 2, 2010

Once upon a time, organizations would extract data from several types of data sources, including different business software applications such as enterprise resource planning (ERP) systems, customer relationship management (CRM) applications, and others. Data sources also included such documents as plain-text docs and even spreadsheets. The traditional way to extract data from these sources involved a data integration application that connected to data sources via an application programming interface (API), which enabled connection and communication between different types of databases or data sources.

However, with the recent explosion of Web content, along with the rapid evolution of software systems, other types of data extraction have become necessary. Organizations now require alternative ways to collect information coming from vast amounts of external Web pages, for such purposes as monitoring customer sentiment, measuring marketing results, and collecting Web content for research purposes.

But for this type of data extraction, companies may not have an API available, either because an API for this does not exist, or because the company simply does not have access to it. Also, some organizations need to extract information from their legacy systems, and may not have an API available for connection via traditional data integration applications.

Some software vendors are addressing these needs with Web data integration tools that collect data without an API. Instead, these tools work with Web-based technologies such as extensible markup language (XML), hypertext markup language (HTML), JavaScript, and simple object access protocol (SOAP), among others. This task is by no means easy, since the output of these different Web technologies has to be standardized before the data can be extracted and transformed into a single source of information.
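To make the idea of API-free extraction concrete, here is a minimal sketch in Python that pulls table rows out of raw HTML using only the standard library. It is an illustration of the general technique, not how any vendor's product works; the `TableExtractor` class, the `extract_rows` helper, and the `SAMPLE_PAGE` markup are all hypothetical names and data invented for this example.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collects the text of each <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # completed rows
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def extract_rows(html):
    """Return the table rows found in an HTML string as lists of cell text."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.rows


# Hypothetical page content, standing in for a page fetched from the Web.
SAMPLE_PAGE = """
<html><body>
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
</body></html>
"""

if __name__ == "__main__":
    for row in extract_rows(SAMPLE_PAGE):
        print(row)
```

A real extraction job would also have to fetch the page, cope with malformed markup, and handle content rendered by JavaScript, which is where much of the difficulty described above comes from.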

The potential utility of this technology is huge. Some cases worth mentioning include the extraction of data from legacy systems, the extraction of information for data mining, and extraction of data for text and sentiment analysis.

Recently, two vendors of this type of technology came to my attention: Kapow Software and Connotate. These vendors may change the way we extract, transform, and integrate data from disparate sources.

Connotate

With Agent Community, New Brunswick, New Jersey (US)-based Connotate provides a product suite that enables organizations to monitor, extract, transform, and integrate information from disparate internal and external Web-based sources. Agent Community was created for non-technical users to design and automate personalized Web data monitoring and extraction applications. Its customers include McGraw-Hill, The Associated Press, and Thomson Reuters.

Agent Community uses a virtual set of process jobs called “intelligent agents” to monitor and collect Web content. These software agents can be customized (or “trained,” in Connotate’s lingo) using a simple point-and-click method to achieve real-time Web data monitoring and collection from internal and external sources. Agent Community uses a patented visual abstraction approach to help users easily identify individual data elements from a specific Web source and subsequently train intelligent agents to monitor and extract the desired information.

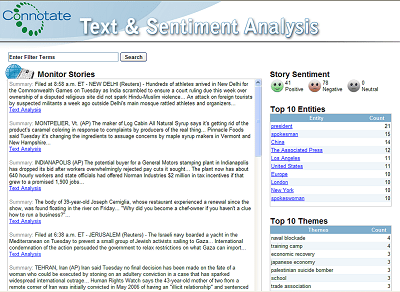

Agent Community enables text and sentiment analysis based on proprietary algorithms for measuring author tone and intent, as well as the perceived slant/bias present in any particular article—a critical component in evaluating customer satisfaction and media perception. Other features include the ability to establish key fields to identify specific data elements, as well as the ability to access extensible business reporting language (XBRL) documents and view them in a structured, usable format.

The main component of Agent Community is Agent Studio. Within this IDE, users can create, modify, publish, and monitor intelligent agents. Agent Studio uses templates that recognize content “type” patterns to map and define metadata content. This allows large numbers of agents to be created and deployed quickly.

Kapow Software

Kapow Software (formerly Kapow Technologies), based in Palo Alto, California (US) and with offices in Denmark, Germany and the UK, has a customer list covering a wide range of industries and organizations, including ESPN, AT&T, and Audi. The vendor positions its application Kapow Katalyst as an enterprise data integration platform that uses browser-based data integration technology (i.e., its patented Kapow Extraction Browser) to extract data from any Web application and process it for integration in the desired platform or format.

Kapow’s integrated development environment (IDE) creates extraction jobs that collect data from the Web, extracting data from external pages (e.g., portals, blogs, and other Web-based content) or from legacy systems for which the only way to extract information is via a Web-based mechanism.

Kapow Katalyst can load or push data from a wide variety of sources without an API, using Web-based technologies to extract data from the front-end and application logic layers, as well as using SQL for the database layer. These features provide Kapow Katalyst with a versatile set of ways to acquire information.

From the business perspective, one of the interesting features of Kapow Katalyst is its ability to access information from cloud-based solutions and social media content, a fast-growing segment of the software industry.

Some of the key components of Kapow Katalyst:

- Design Studio, the IDE that enables Kapow’s product to create, test, and manage rule-based data processes (called “robots”) in a completely graphical way.

- RoboServer, an execution server that enables the operation of all robots performing Web-based extraction, transformation, and integration processes.

- An Extraction Browser to integrate the extracted data with the desired application, whether it be a relational database, an enterprise application, or a cloud-based application.

- A Management Console to schedule tasks, monitor performance, and manage roles and permissions. It can also turn robots into APIs.

Kapow’s capabilities for working with unstructured data are appealing. The application has a complete set of transformation and integration functions to work with strings, numbers, and date/time data types, along with specific abilities to work with transformations that deal with HTML tags and URLs.
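As a rough illustration of the kinds of transformations mentioned above (strings, numbers, dates, HTML tags, and URLs), the following Python sketch cleans up a few typical scraped values with the standard library. It does not reproduce Kapow's functions; the helper names (`strip_tags`, `parse_price`, `parse_date`, `absolute_url`) and the sample values are assumptions made for this example, and `parse_date` assumes English month names.

```python
import re
from datetime import datetime
from html import unescape
from urllib.parse import urljoin


def strip_tags(fragment):
    """Remove HTML tags, decode entities, and collapse whitespace."""
    text = re.sub(r"<[^>]+>", " ", fragment)
    return " ".join(unescape(text).split())


def parse_price(text):
    """Turn a string such as '$1,299.00' into a float."""
    return float(re.sub(r"[^0-9.]", "", text))


def parse_date(text, fmt="%B %d, %Y"):
    """Parse a date such as 'December 2, 2010' (English month names assumed)."""
    return datetime.strptime(text, fmt).date()


def absolute_url(base, link):
    """Resolve a relative link against the URL of the page it was found on."""
    return urljoin(base, link)


if __name__ == "__main__":
    # Hypothetical scraped values, for illustration only.
    print(strip_tags("<p>Tom &amp; <b>Jerry</b></p>"))
    print(parse_price("$1,299.00"))
    print(parse_date("December 2, 2010"))
    print(absolute_url("http://example.com/products/list.html", "../img/a.png"))
```

The regular-expression tag stripper is adequate for small, well-formed fragments only; for full pages, a real HTML parser is the safer choice.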

Kapow Katalyst is available in various delivery models: on-premises, hosted, or via a software-as-a-service (SaaS) license.
